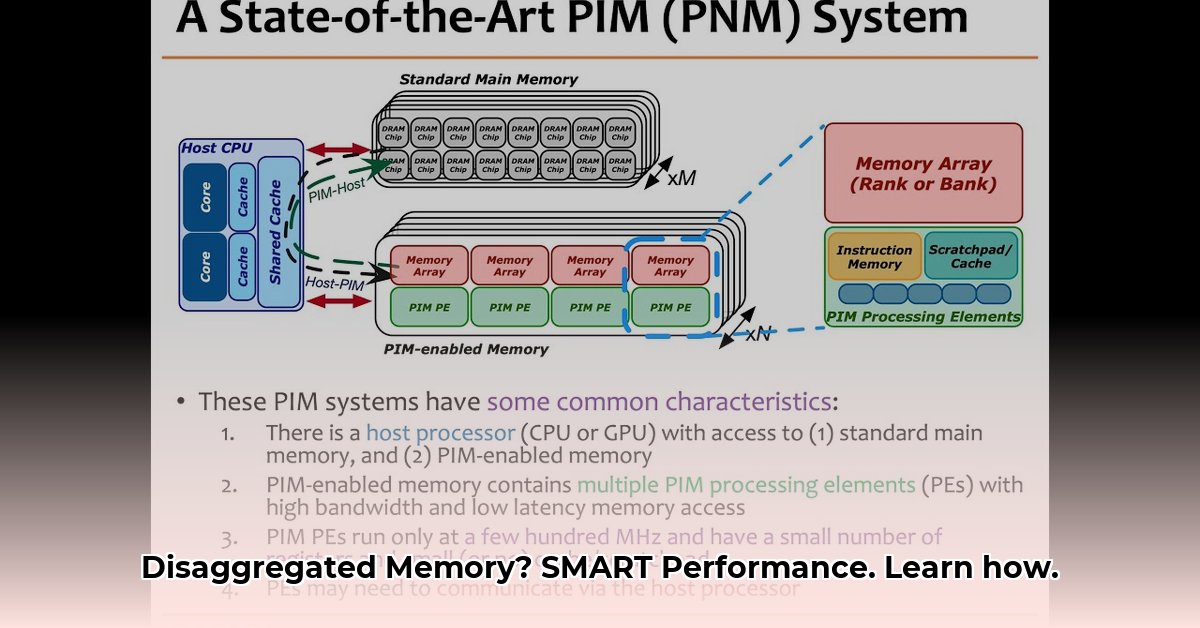

Introduction: Untangling the Disaggregated Memory Knot

Disaggregated memory, which separates memory from compute resources, offers tantalizing possibilities for scalability and flexibility. However, accessing this remote memory efficiently is a significant challenge. Compute nodes typically reach it through Remote Direct Memory Access (RDMA). Applications that issue large numbers of concurrent, fine-grained remote accesses hit bottlenecks inside the RDMA network interface card (RNIC) that keep them from scaling, which limits the technology’s potential. SMART (Scalable Memory Access Runtime), introduced at ASPLOS ’24, offers a solution. This guide explores SMART’s architecture, benefits, implementation, and potential impact on the future of high-performance computing.

Decoding the Disaggregated Memory Dilemma

Imagine data scattered across numerous servers, like a library with books spread across multiple buildings. This distributed approach offers advantages in capacity and resource pooling, but it complicates data access. RDMA bridges the gap, yet at high thread counts it runs into bottlenecks such as “doorbell contention” (threads implicitly competing for the RNIC’s doorbell registers, which notify the card that new work requests are ready), “cache thrashing” (too many outstanding work requests overflowing the RNIC’s on-chip cache), and wasted operations from atomic updates that fail under contention and must be retried. These issues limit the effectiveness of disaggregated memory systems.

SMART Framework: An Architectural Overview

SMART improves upon one-sided RDMA verbs with a three-pronged approach:

- Thread-Aware Resource Allocation: Like assigning specialized librarians to different library sections, SMART dedicates RDMA resources to individual threads so that they no longer contend implicitly for shared doorbell registers. This minimizes contention and improves parallel processing.

- Adaptive Work Request Throttling: Similar to a librarian managing the flow of requests, SMART dynamically limits the number of outstanding RDMA work requests. This keeps the RNIC’s cache from thrashing and maintains consistent performance under varying workloads.

- Conflict Avoidance: When many threads contend for the same remote data, atomic operations such as compare-and-swap (CAS) fail and must be retried, wasting network operations. SMART reduces these unsuccessful retries, much like a librarian asking patrons to take turns rather than all reaching for the same popular book at once. This reduces redundant operations and improves efficiency.
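The three mechanisms above can be illustrated with a toy model. The Python sketch below is not SMART’s implementation and does not touch real RDMA hardware; every name in it (`assign_slots`, `max_sharing`, `Throttle`, `backoff_delay_us`) is hypothetical. It assumes a round-robin mapping of threads to dedicated resource slots, an additive-increase/multiplicative-decrease (AIMD) policy for capping outstanding work requests, and truncated exponential backoff after a failed compare-and-swap. These are generic techniques chosen for illustration; the paper’s actual policies may differ.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


# --- Thread-aware allocation (toy model) ---------------------------------
# Each thread gets a resource slot (think: a hypothetical per-thread doorbell
# or queue context). Fewer threads per slot means less implicit contention.
def assign_slots(num_threads: int, num_slots: int) -> dict[int, int]:
    """Map thread id -> slot id, spreading threads round-robin."""
    return {tid: tid % num_slots for tid in range(num_threads)}


def max_sharing(assignment: dict[int, int]) -> int:
    """Largest number of threads that end up sharing one slot."""
    counts: dict[int, int] = {}
    for slot in assignment.values():
        counts[slot] = counts.get(slot, 0) + 1
    return max(counts.values()) if counts else 0


# --- Adaptive work request throttling (assumed AIMD policy) ---------------
@dataclass
class Throttle:
    limit: int = 8                 # current cap on outstanding work requests
    min_limit: int = 1
    max_limit: int = 256
    target_latency_us: float = 10.0

    def on_sample(self, latency_us: float) -> int:
        """Grow the cap by one while latency is healthy; halve it on a spike."""
        if latency_us > self.target_latency_us:
            self.limit = max(self.min_limit, self.limit // 2)
        else:
            self.limit = min(self.max_limit, self.limit + 1)
        return self.limit


# --- Conflict avoidance (assumed truncated exponential backoff) -----------
def backoff_delay_us(retry: int, base_us: float = 1.0, cap_us: float = 64.0,
                     rng: random.Random | None = None) -> float:
    """Random wait before retrying a failed CAS; the window doubles per retry."""
    if rng is None:
        rng = random.Random()
    window = min(cap_us, base_us * (2 ** retry))
    return rng.uniform(0.0, window)
```

For example, with 16 threads and 8 slots, `max_sharing(assign_slots(16, 8))` is 2, so every slot is shared by two threads; giving each thread its own slot brings it down to 1. The AIMD choice reflects a common trade-off: the cap probes upward slowly while latency stays healthy and retreats quickly when it does not.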

SMART’s Advantages: A Performance Boost

SMART’s design results in several key benefits:

- Improved Scalability: Thread-aware allocation allows applications to scale more effectively across multiple threads and utilize disaggregated memory resources more efficiently.

- Enhanced Performance: Adaptive throttling and conflict avoidance minimize bottlenecks, significantly improving throughput and reducing latency.

- Simplified Development: SMART’s asynchronous API simplifies integration with existing applications, reducing development time and effort.
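To make the asynchronous access style concrete, here is a minimal sketch using Python’s `asyncio`. A local `bytearray` stands in for the remote memory node, and `remote_read`, `read_many`, and `MAX_IN_FLIGHT` are hypothetical names, not SMART’s API. The caller issues many reads at once and waits for all their completions together, while a semaphore bounds how many are outstanding, echoing the throttling idea.

```python
from __future__ import annotations

import asyncio

# Toy "memory node": a 4 KiB local buffer standing in for remote memory.
REMOTE = bytearray(range(256)) * 16

MAX_IN_FLIGHT = 16  # hypothetical cap on outstanding requests


async def remote_read(offset: int, length: int, gate: asyncio.Semaphore) -> bytes:
    """Hypothetical one-sided read: the memory node's CPU is not involved."""
    async with gate:              # respect the outstanding-request cap
        await asyncio.sleep(0)    # yield to the event loop, standing in for waiting on a completion
        return bytes(REMOTE[offset:offset + length])


async def read_many(offsets: list[int], length: int) -> list[bytes]:
    gate = asyncio.Semaphore(MAX_IN_FLIGHT)
    # Issue every read up front, then wait for all of them to complete.
    return await asyncio.gather(*(remote_read(o, length, gate) for o in offsets))


results = asyncio.run(read_many([0, 64, 128, 4000], 8))
```

The appeal of this style is that application code still reads like a sequence of simple remote reads, while the runtime decides how many to keep in flight.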

Implementation Details: Integrating SMART

SMART involves a modification to the RDMA core library. The GitHub repository provides detailed instructions for building, installing, and integrating SMART into your application. Code examples illustrating API usage will be available soon.

Performance Evaluation: Benchmarking Results

The ASPLOS ’24 paper presents compelling performance results. Applications refactored to use SMART, including RACE (a disaggregated hash table), FORD (a persistent transaction processing system), and Sherman (a disaggregated B+Tree), showed substantial throughput improvements.

| Application | Throughput improvement with SMART |
|---|---|
| RACE Hash Table | Up to 132.4x |
| FORD Transaction | Up to 5.2x |
| Sherman B+Tree | Up to 2x |

While these results are impressive, performance may vary depending on specific application workloads and hardware configurations.

Getting Started: A Practical Guide

The SMART GitHub repository is the starting point for obtaining, building, and integrating SMART, and a simple example demonstrating basic usage is planned. While some technical expertise (particularly with RDMA) is required, the documentation aims to make the process as user-friendly as possible.

Comparing SMART: Alternative Approaches

Other solutions exist for managing disaggregated memory, but SMART’s combined approach of thread awareness, adaptive throttling, and conflict avoidance differentiates it and likely contributes to its superior performance in many scenarios. A detailed comparison will be available in future updates.

Future Directions and Limitations

SMART holds significant promise for various applications, including cloud computing, big data analytics, and machine learning. Ongoing research explores extending SMART’s capabilities to support a wider range of hardware platforms and optimizing its performance for emerging workloads. However, like any technology, SMART has limitations. Further research is needed to fully explore these limitations and develop potential mitigations.

Conclusion: A New Era for Disaggregated Memory

SMART offers a compelling approach to unlocking the potential of disaggregated memory. By tackling key performance bottlenecks, it paves the way for more efficient and scalable applications. While research continues, SMART represents a significant advancement in the field and suggests a bright future for high-performance computing in the age of disaggregated memory. Explore the SMART GitHub repository to learn more and contribute to this exciting project.

References

- ASPLOS ’24 Paper (link-to-paper)

- SMART GitHub Repository (link-to-github-repo)

